Abstract

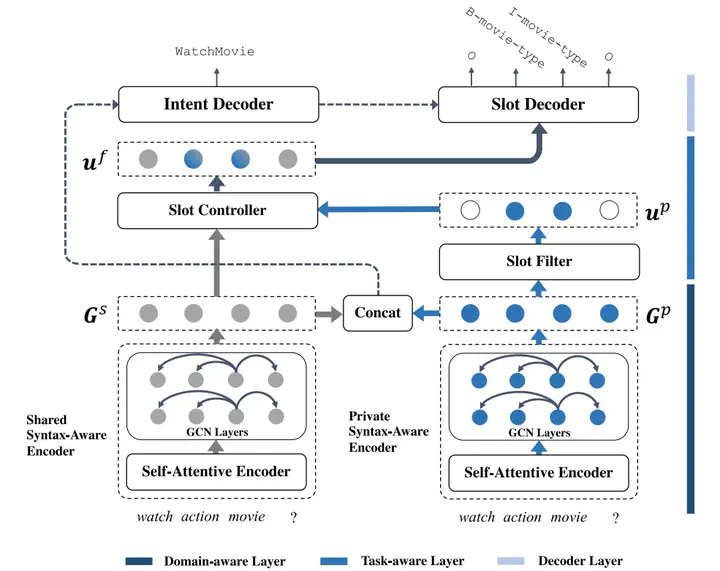

Spoken language understanding (SLU) has been addressed as a supervised learning problem, in which a set of training data is available for each domain. However, annotating data for each new domain is financially costly and does not scale. One existing approach addresses this problem through multi-domain learning, in which parameters are shared for joint training across domains; this shared parameterization is domain-agnostic and task-agnostic. In this article, we propose to improve the parameterization of this method by using domain-specific and task-specific model parameters for fine-grained knowledge representation and transfer. Experiments on five domains show that our model is more effective for multi-domain SLU and obtains the best results. In addition, we show its transferability when adapting to a new domain with little data, where it outperforms the prior best model by 12.4%. Finally, we explore a strong pre-trained model in our framework and find that the contributions of our framework do not fully overlap with those of contextualized word representations (RoBERTa).